What is glm-4v-9b?

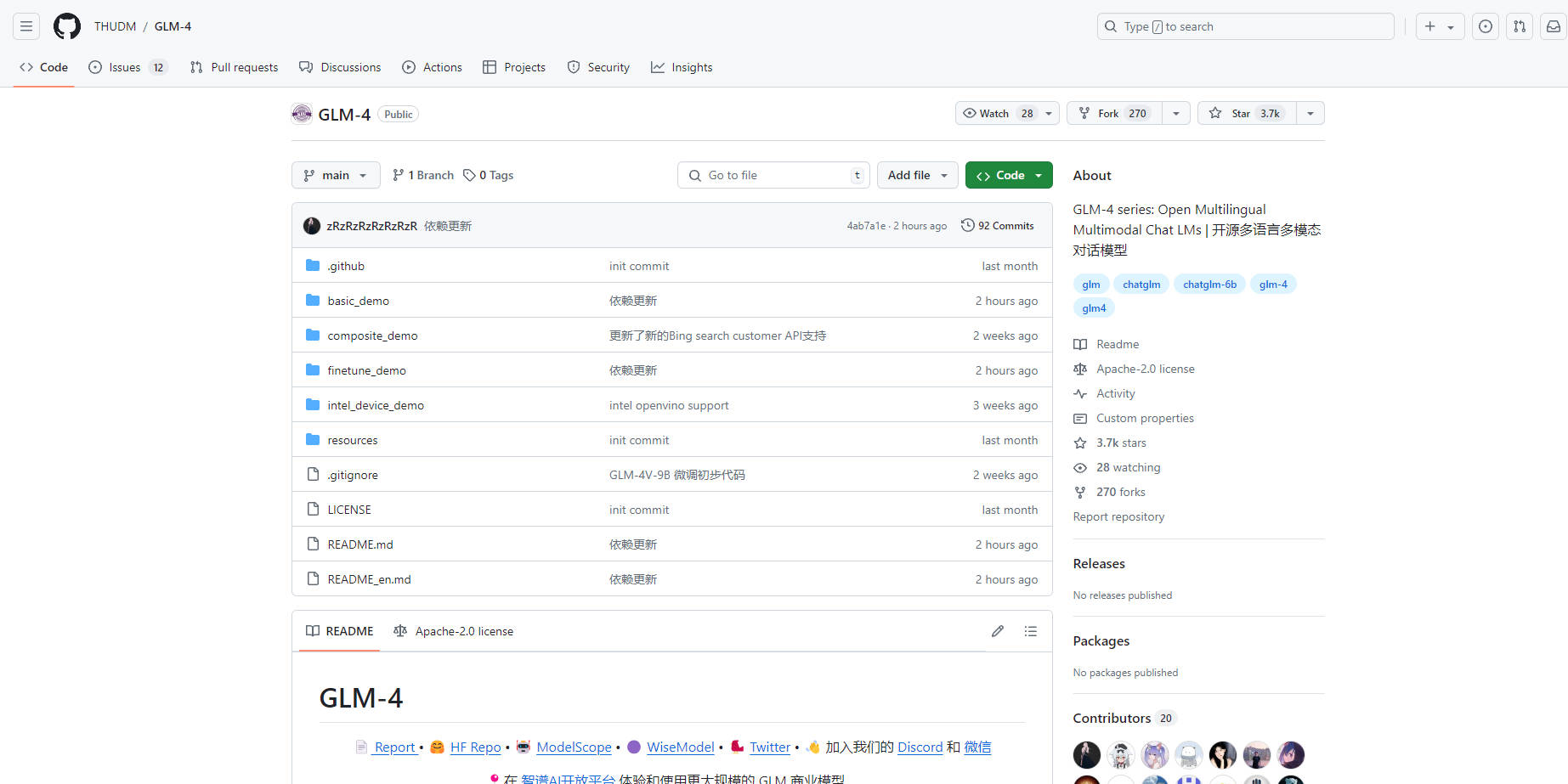

GLM-4V-9B is a multimodal language model in the GLM-4 series, developed by Zhipu AI together with Tsinghua University's Knowledge Engineering Group (THUDM). The series also includes chat-oriented text models; GLM-4V-9B adds visual understanding, which lets it handle image description, visual question answering, and multimodal reasoning. It performs well on multimodal benchmarks, particularly those involving optical character recognition (OCR).

Key Features

Multimodal Understanding and Generation: GLM-4V-9B can generate detailed, coherent descriptions of images, answer questions about visual content, and perform visual reasoning and OCR. This makes it well suited to analyzing complex charts or diagrams and summarizing their key information.

Cross-Language Support: The model supports both Chinese and English, so the same image can be queried and described in either language.

Advanced Chat and Multimodal Capabilities: GLM-4V-9B can hold dialogues that mix images and text, which makes it a practical base for multimodal conversational assistants. It handles image captioning and visual question answering, and can combine visual and textual elements when generating content.
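The visual question answering flow described above can be sketched with Hugging Face Transformers. This is a minimal sketch under stated assumptions, not an official recipe: the repository name `THUDM/glm-4v-9b`, the `"image"` key in the chat message, and the need for `trust_remote_code=True` reflect the published model card as best recalled and should be checked against it. The helper names `build_messages` and `ask_about_image` are hypothetical, and running the model requires a CUDA GPU with enough memory for a 9B-parameter model.

```python
from typing import Any, Dict, List

# Assumption: the Hugging Face repository that hosts the weights.
MODEL_ID = "THUDM/glm-4v-9b"


def build_messages(image: Any, question: str) -> List[Dict[str, Any]]:
    """Build a single-turn chat message pairing one image with one question.

    Assumption: the model's custom chat template reads the image from an
    "image" key placed next to the usual "role" and "content" keys.
    """
    if not question.strip():
        raise ValueError("question must not be empty")
    return [{"role": "user", "image": image, "content": question}]


def ask_about_image(image_path: str, question: str, max_new_tokens: int = 256) -> str:
    """Load the model and answer one question about one image (needs a GPU)."""
    # Heavy imports are deferred so build_messages works without them installed.
    import torch
    from PIL import Image
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,
        low_cpu_mem_usage=True,
        trust_remote_code=True,
    ).to("cuda").eval()

    image = Image.open(image_path).convert("RGB")
    inputs = tokenizer.apply_chat_template(
        build_messages(image, question),
        add_generation_prompt=True,
        tokenize=True,
        return_tensors="pt",
        return_dict=True,
    ).to("cuda")

    with torch.no_grad():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=False
        )
    # Keep only the tokens generated after the prompt.
    new_tokens = output_ids[:, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens[0], skip_special_tokens=True)
```

A call such as `ask_about_image("chart.png", "Summarize the key figures in this chart.")` would cover the chart-summarization use case mentioned above; the question can be written in Chinese or English.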


glm-4v-9b Alternatives

- GLM-4-9B is the open-source version of the latest-generation GLM-4 series of pre-trained models launched by Zhipu AI.
- ChatGLM-6B is an open bilingual (Chinese and English) model with 6.2B parameters, currently optimized for Chinese question answering and dialogue.
- The New Paradigm of Development Based on MaaS, Unleashing AI with our universal model service
- Yi Visual Language (Yi-VL) is the open-source, multimodal version of the Yi Large Language Model (LLM) series, enabling content comprehension, recognition, and multi-round conversations about images.
