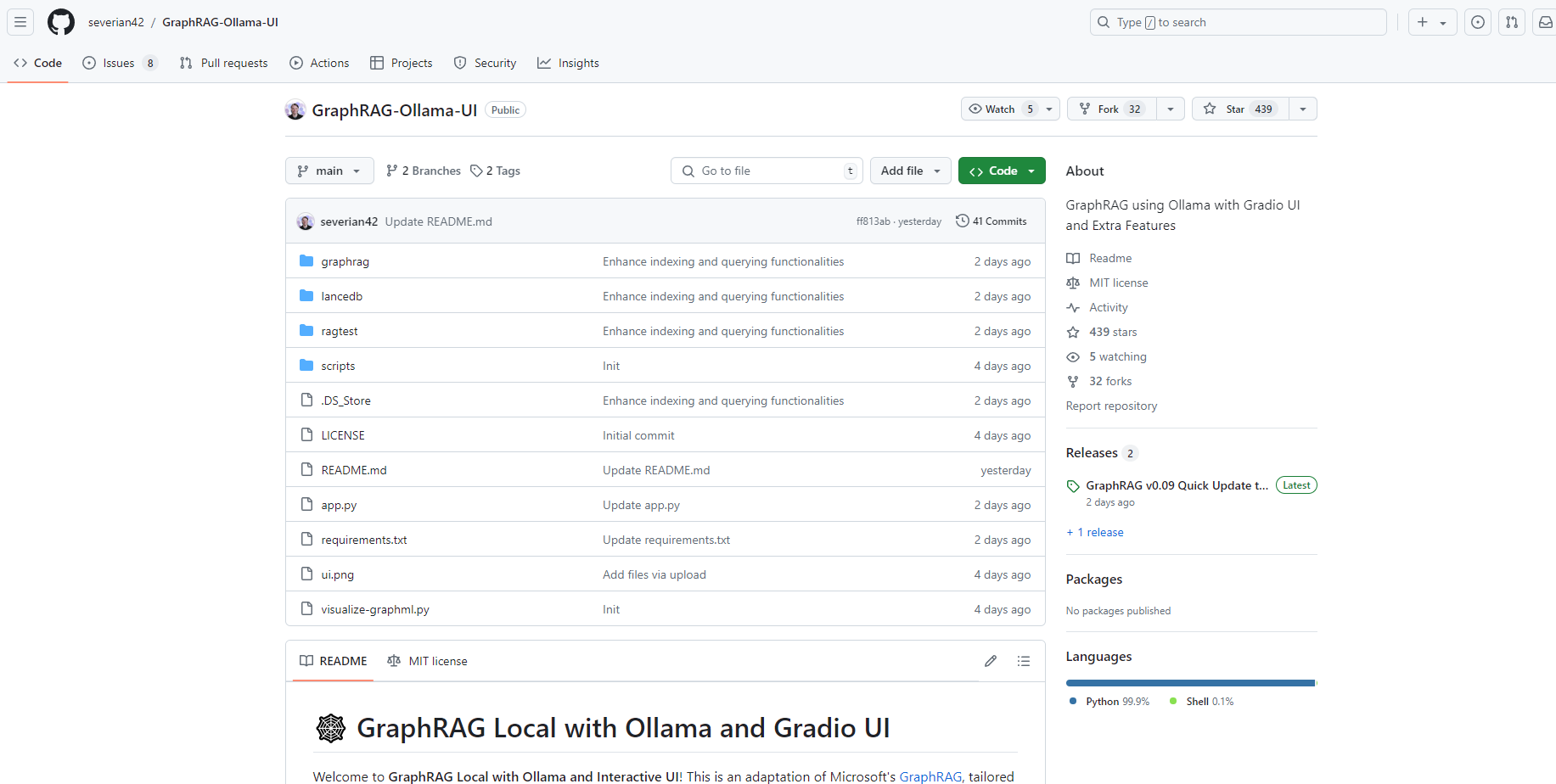

What is GraphRAG-Ollama-UI?

GraphRAG Local with Ollama and Interactive UI is an adaptation of Microsoft’s GraphRAG that runs on local models, making it a cost-effective way to manage and visualize knowledge graphs. It uses Ollama for LLM and embedding support and adds an interactive user interface for handling data and viewing graphs in real time.

Key Features:

Local Model Support 🖥️

Employs Ollama for local LLM and embedding models.

Reduces dependency on expensive cloud-based AI services.
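As a rough illustration of what "local model support" means in practice, the sketch below sends a prompt to an Ollama server over its REST API using only the Python standard library. It assumes Ollama is running at its default address (`http://localhost:11434`) and that a model has already been pulled; the helper names `build_generate_request` and `generate` are hypothetical and are not taken from the GraphRAG-Ollama-UI code.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumption: not reconfigured).
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Prepare a non-streaming POST to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the generated text.

    Requires a running Ollama server with the named model available.
    """
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With a server running, a call such as `generate("llama3", "Name one use of a knowledge graph.")` would return the model's reply as a string; `llama3` is only a placeholder for whichever model is installed locally.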

Interactive UI 🌐

User-friendly interface for managing data, running queries, and visualizing results.

Simplifies operations that previously required the command line.

Real-time Graph Visualization 📈

Utilizes Plotly for 3D visualization of knowledge graphs.

Enhances understanding and exploration of data relationships.
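To give a feel for how a knowledge graph can be drawn in 3D with Plotly, here is a minimal sketch. It is not the project's own rendering code: the helix layout, the function names, and the sample nodes are all illustrative assumptions. The one Plotly-specific convention it relies on is that a `None` entry in a coordinate list breaks the line, which lets all edges share a single `Scatter3d` trace.

```python
import math


def helix_layout(nodes):
    """Place nodes on a simple helix so the example needs no layout library."""
    n = max(len(nodes), 1)
    return {
        node: (math.cos(2 * math.pi * i / n), math.sin(2 * math.pi * i / n), i / n)
        for i, node in enumerate(nodes)
    }


def edge_coordinates(positions, edges):
    """Flatten edges into x/y/z lists; a None entry ends each line segment."""
    xs, ys, zs = [], [], []
    for source, target in edges:
        (x0, y0, z0), (x1, y1, z1) = positions[source], positions[target]
        xs += [x0, x1, None]
        ys += [y0, y1, None]
        zs += [z0, z1, None]
    return xs, ys, zs


def build_figure(nodes, edges):
    """Return a Plotly figure with one trace for edges and one for nodes."""
    import plotly.graph_objects as go  # third-party: pip install plotly

    positions = helix_layout(nodes)
    ex, ey, ez = edge_coordinates(positions, edges)
    edge_trace = go.Scatter3d(x=ex, y=ey, z=ez, mode="lines", hoverinfo="none")
    node_trace = go.Scatter3d(
        x=[positions[n][0] for n in nodes],
        y=[positions[n][1] for n in nodes],
        z=[positions[n][2] for n in nodes],
        mode="markers+text",
        text=list(nodes),
    )
    return go.Figure(data=[edge_trace, node_trace])
```

Calling `build_figure(["Ollama", "GraphRAG", "Plotly"], [("Ollama", "GraphRAG"), ("GraphRAG", "Plotly")]).show()` would open an interactive 3D view that can be rotated and zoomed. A real tool would likely use a force-directed layout rather than a fixed helix.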

File and Settings Management 📁

Direct UI access for file upload, editing, and management.

Easy updates and management of GraphRAG settings.

Customization Options 🔧

Flexibility to experiment with different models in the settings.yaml file.

Supports various LLM and embedding models provided by Ollama.
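Swapping models usually comes down to editing a few keys in settings.yaml. The sketch below renders a fragment of that file from Python. The key layout (`llm`, `embeddings.llm`, `type`, `model`, `api_base`) follows the settings format of early Microsoft GraphRAG releases and is an assumption here; the file shipped with GraphRAG-Ollama-UI may differ. The base URL is Ollama's OpenAI-compatible endpoint, the model names are examples only, and `render_settings` is a hypothetical helper.

```python
# Fragment of a GraphRAG-style settings.yaml (assumed layout, not the project's file).
SETTINGS_TEMPLATE = """\
llm:
  type: openai_chat
  model: {llm_model}
  api_base: {base_url}
embeddings:
  llm:
    type: openai_embedding
    model: {embedding_model}
    api_base: {base_url}
"""


def render_settings(
    llm_model: str,
    embedding_model: str,
    base_url: str = "http://localhost:11434/v1",  # Ollama's OpenAI-compatible API
) -> str:
    """Fill the template with the chosen chat and embedding model names."""
    return SETTINGS_TEMPLATE.format(
        llm_model=llm_model,
        embedding_model=embedding_model,
        base_url=base_url,
    )
```

For example, `render_settings("mistral", "nomic-embed-text")` produces a fragment pointing both the chat model and the embedding model at the local Ollama server. Whether embeddings work through this endpoint without further changes depends on the GraphRAG and Ollama versions in use.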

Use Cases:

Research and Development 🧪

Researchers can use GraphRAG Local to explore and visualize complex datasets.

The interactive UI aids in hypothesis testing and data analysis.

Enterprise Solutions 🏢

Businesses can leverage this tool for cost-effective knowledge graph management.

Enhances data insights and decision-making processes.

Educational Purposes 🎓

Educators and students can use GraphRAG Local for teaching and learning graph-based data structures and AI concepts.

Conclusion:

GraphRAG Local with Ollama and Interactive UI is a versatile tool for anyone involved in knowledge graph management and visualization. Its local model support and user-friendly interface make it a cost-effective and efficient choice. We invite you to experience the power of GraphRAG Local and explore the possibilities it offers in managing and visualizing your data.

GraphRAG-Ollama-UI Alternatives

- RLAMA is a powerful AI-driven question-answering tool for your documents, seamlessly integrating with your local Ollama models. It enables you to create, manage, and interact with Retrieval-Augmented Generation (RAG) systems tailored to your documentation needs.

- Streamlined and promptable Fast GraphRAG framework designed for interpretable, high-precision, agent-driven retrieval workflows.

- Run large language models locally using Ollama. Enjoy easy installation, model customization, and seamless integration for NLP and chatbot development.

- LightRAG is an advanced RAG system. With a graph structure for text indexing and retrieval, it outperforms existing methods in accuracy and efficiency. Offers complete answers for complex info needs.

- Local III makes it easier than ever to use local models. With an interactive setup, you can select an inference provider, select a model, download new models, and more.